Introduction

The world’s computing backbone is quietly undergoing a paradigm shift from serial to parallel processing, driven largely by the rise of artificial intelligence. Unlike the single-threaded CPU era, today’s data centers are dominated by massively parallel processors that can tackle many operations simultaneously [1]. This transition has enabled breakthroughs in machine learning, but it also brings unprecedented demand for computational power.

According to Bain & Company, by 2030 AI providers may need around 200 gigawatts of additional compute capacity and roughly $2 trillion in annual revenue to fund it. Even assuming efficiency gains, that leaves an $800 billion shortfall versus projected demand [2]. In short, AI’s appetite for computing is outpacing our ability to pay for and power it, straining budgets and power grids alike.

GPUs Take Center Stage in AI

From cloud data centers to research labs, GPUs (graphics processing units) have become the workhorse for AI. Their ability to perform thousands of calculations in parallel makes them ideally suited for training large neural networks and running inference on massive models. As one analyst explains, “the massive computing requirements of AI workloads require thousands of times more computing resources than traditional applications.” That’s why the industry shifted “away from the serial computing of CPUs toward the parallel computing that is taking over the data center with the rise of GPUs”. By breaking problems into many small pieces that run concurrently, GPUs have slashed AI training times from months or years down to days or weeks. This parallel processing revolution is transforming infrastructure and enabling today’s AI boom.

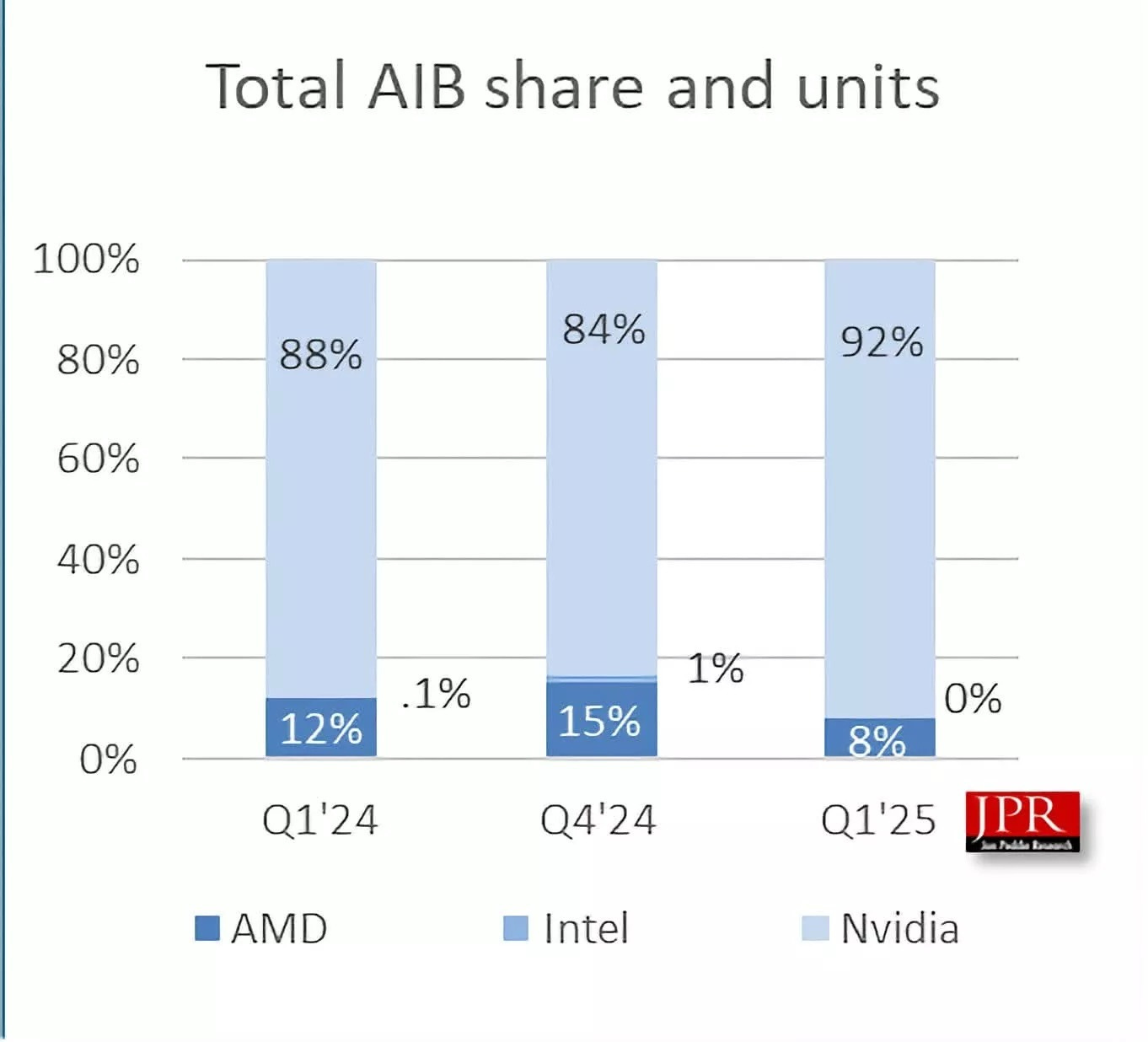

However, the dominance of GPUs also concentrates both technological and market power. Nvidia, which pioneered GPU computing for AI, has achieved a de facto monopoly in high-end AI chips that is estimated at over 70% market share in AI acceleration [3]. Nvidia’s latest flagship chips like the A100 and H100 have become essential infrastructure for leading AI firms, allowing Nvidia to command premium prices and margins.

This has created both opportunities and tensions:

For Nvidia, it fuels trillion-dollar market capitalization and unmatched influence in AI hardware.

For users, it raises concerns about “golden handcuffs” in the industry, with many feeling “locked in” to a costly, single-vendor ecosystem [4].

For the broader industry, it has triggered countermeasures aimed at loosening Nvidia’s grip.

This has fueled Nvidia’s market capitalization past the trillion-dollar mark, but also raised concerns about “golden handcuffs” in the industry, where users feel locked into an expensive, single-vendor ecosystem. These dynamics are now prompting responses across the tech landscape, all aimed at supporting AI’s growth while loosening Nvidia’s grip.

Insatiable Demand Meets Economic Reality

The surge in AI activity has set off an investment super-cycle in compute infrastructure, but the numbers are daunting. As mentioned, Bain & Company’s 2025 Global Tech Report projects that meeting anticipated AI demand by 2030 would require about $2 trillion in yearly revenue and roughly $500 billion in annual data-center capital expenditures.

Even after accounting for efficiency gains and cost savings from AI, the world would still be about $800 billion short per year of what’s needed [2]. In other words, the economics don’t yet add up: the pace of AI adoption is outrunning the financial and physical capacity of companies to build and power enough hardware.

This shortfall is echoed by other analysts. McKinsey, for example, estimates that in a mid-case scenario roughly $5.2 trillion of data-center investment will be needed by 2030 just for AI workloads, requiring 125–205 GW of new capacity (the equivalent of dozens of new hyperscale facilities) [5].

In a faster-growth scenario, the investment could reach $7–8 trillion by 2030. The reasons include not only the sheer compute required for ever-larger AI models, but also ancillary costs (power generation and grid upgrades, cooling systems, networking gear, etc.) Bain notes that because AI compute demand is growing at more than twice the pace of Moore’s Law, it’s straining supply chains and utilities that haven’t expanded at that rate [2]. In short, we are entering an era where scaling AI will depend as much on infrastructure finance and energy capacity as on algorithmic innovation. This reality is forcing tough questions:

How will we fund the “arms race” for AI compute?

Where will the electricity come from?

Can new technologies or architectures bend the cost curve before constraints stall progress?

Next-Gen GPUs and Cloud Economics

Ironically, even as AI drives unprecedented demand, the cost dynamics of compute are in flux, especially in the cloud. The latest generations of AI accelerators (like Nvidia’s current “Hopper” H100 and upcoming “Blackwell” GPUs) deliver record performance, but at staggering prices, often tens of thousands of dollars per chip, or $5–$10 per hour in cloud rentals at launch.

Yet these prices are not static. In fact, cloud providers have begun rapidly cutting the cost of GPU instances as supply catches up and competition increases. For example, Amazon Web Services (AWS) announced in mid-2025 significant price reductions for its GPU cloud instances (P4 and P5) powered by Nvidia A100, H100, and even the newer “H200” GPUs [6]. AWS attributed the cuts to economies of scale and declining hardware costs, noting that historically “high demand for GPU capacity… outpaced supply, making GPUs more expensive to access”. Now, with greater availability, those prices are coming down.

One industry CEO illustrated how extreme this swing has been: “A year ago, an H100 was maybe $5–6 per hour. Now it’s around 75 cents, maybe less.” In other words, cloud GPU rental costs dropped by ~80% within 12 months. Such a rapid depreciation is almost unheard of for cutting-edge hardware. This sudden price collapse has dual effects:

Positive: It’s great news for AI startups and researchers who can suddenly afford more experimentation, democratizing access to high-performance compute.

Negative: It’s brutal for smaller cloud providers or less diversified firms that bought expensive GPUs hoping to rent them out, their potential revenue can evaporate far faster than the hardware depreciation cycle.

This dynamic reshapes the economics of AI: the largest players (like AWS, Google, Microsoft) can slash cloud AI prices to undercut competitors and attract workload, knowing they can weather the margin hit. Meanwhile, it pressures independent data centers and GPU cloud startups, who face hardware they leased at high cost now yielding much lower returns.

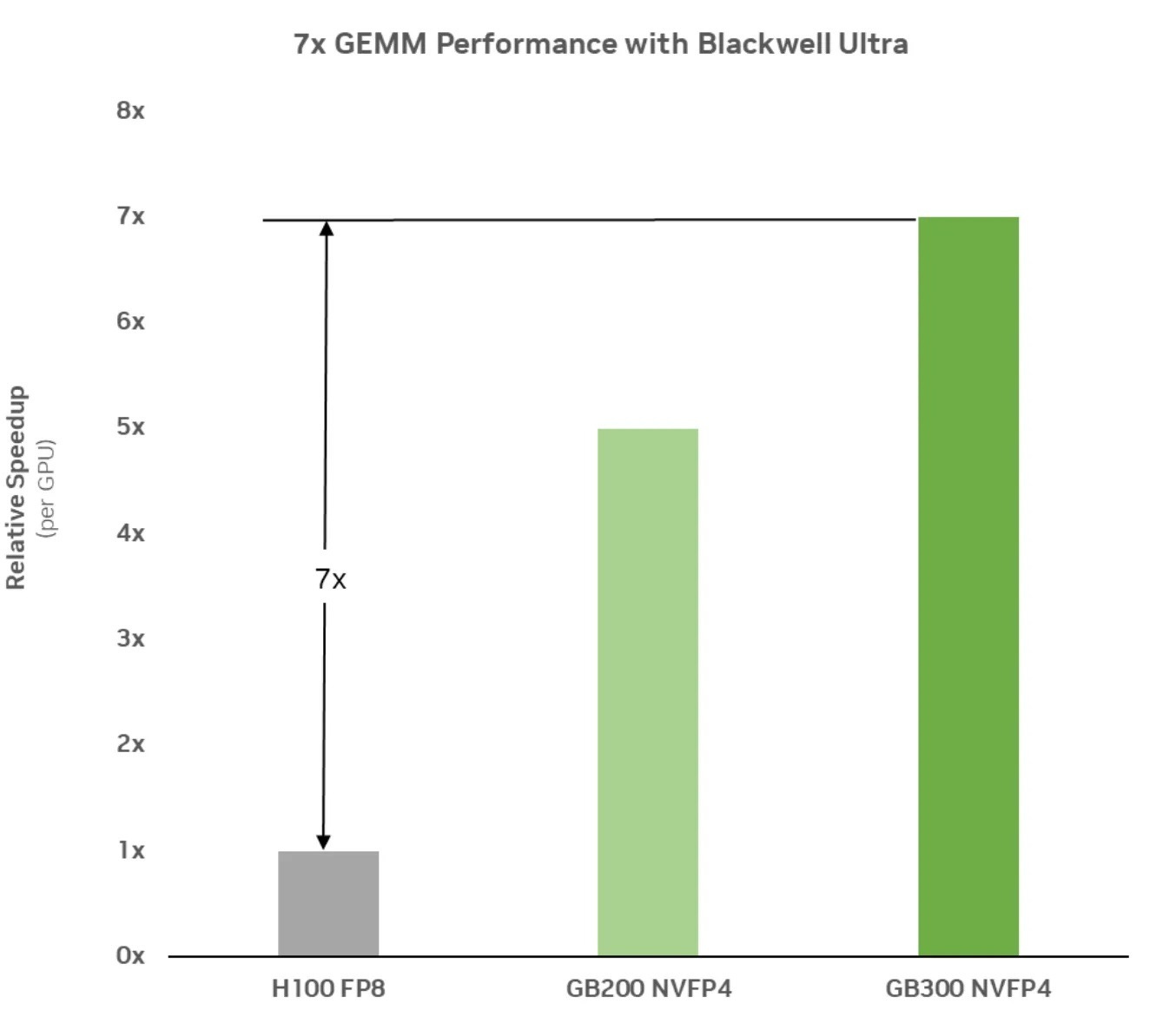

At the same time, Nvidia’s own strategy with new GPUs is evolving. The company’s upcoming Blackwell generation exemplifies how AI-centric design is altering value propositions. Blackwell GPUs reportedly trade off some traditional capabilities (like peak 64-bit floating-point throughput) in favor of sheer throughput on lower-precision AI tasks. In fact, Nvidia’s FLOPS numbers have “exploded” by trading precision for performance:

Low-precision AI tasks (FP4): ~20 petaFLOPS per chip, a huge number optimized for neural network operations

Double-precision HPC (FP64 vector): Only ~45 teraFLOPS, adequate but not industry-leading [7]

Matrix FP64 operations: Blackwell’s FP64 performance is actually a slight regression compared to the previous H100 generation.

In contrast, rival AMD’s MI300X series GPUs emphasize 64-bit compute for HPC, with one variant hitting 81 TFLOPS vector and 163 TFLOPS matrix FP64. Nvidia’s bet is that for the bulk of AI (which can tolerate lower numerical precision), it’s better to maximize throughput per chip (even using 8-bit or 4-bit math), and incorporate just “good enough” double-precision to satisfy the subset of HPC customers. By “trading precision for performance,” Nvidia caters to its largest market (AI) and yields astonishing performance metrics, but it leaves a potential gap in the pure HPC space for competitors to target.

The upshot is twofold: cloud AI computing is getting cheaper per operation, yet the absolute cutting edge in performance remains expensive and technically complex (e.g. Nvidia’s highest-end systems). We see a bifurcation: mainstream enterprises can now access mid-tier AI hardware at lower cost, while elite labs still chase record-breaking systems with sky-high budgets. It’s a dynamic similar to other tech cycles (think of commercial airplanes vs. fighter jets). For the AI industry, this raises strategic considerations around price/performance sweet spots and how broadly advanced AI capabilities will diffuse.

Bottlenecks: Bandwidth and Power at Scale

As AI models and hardware scale up, it’s not just compute FLOPs that matter. Data movement has become a critical bottleneck. Feeding these hungry accelerators with data (and moving results around) is often the limiting factor to realized performance. In fact, in many AI systems today, GPUs are underutilized not because they lack raw compute, but because of two key constraints:

Memory and Networking: The I/O subsystems are “still playing catch up,” preventing the GPU from being fully leveraged [1].

Synchronization: An Nvidia research revealed that serving big models efficiently demands enormous memory and bandwidth [8]. Key findings included:

Hundreds of GB of memory per server are needed for a single large model instance;

High memory bandwidth is critical for high throughput per user; and

If communication latencies between chips aren’t kept to microsecond levels, fast memory doesn’t translate to performance.

In short, you can’t just throw more raw compute at LLM inference. You need to solve memory and synchronization bottlenecks too. The study also noted that DRAM-based designs have a fundamental efficiency advantage in throughput per dollar or watt for these workloads, implying that memory-rich architectures can outperform purely compute-dense ones for inference.

All this suggests economies of scale in AI, but not only the obvious one of spreading fixed costs. There’s a technical economy of scale: larger unified memory pools and faster interconnects (which often come from bigger devices or tightly-coupled multi-chip modules) can achieve higher utilization than many small, distributed chips.

This is one reason we see approaches like wafer-scale processors (Cerebras) or massive GPU modules with terabytes of shared memory: they attack the memory bandwidth and latency constraints directly by keeping more of the model “on-chip” and minimizing off-chip data movement. Of course, building such hardware is extremely challenging, and not every workload needs that approach. But for the largest models, the memory wall looms large.

There’s also the literal wall: power and cooling. High-end AI datacenters now push power densities that test the limits of facilities. Providing 10 times as much power (and equivalent cooling) per rack for AI systems compared to a traditional server cluster is becoming common [1]. Many sites have had to adopt liquid cooling for the first time to dissipate heat from tightly packed GPUs. Even securing enough electricity from the grid can be a hurdle.

Bottlenecks such as memory, networking, power, cooling, and even regulatory approvals for new data center builds mean that scaling up AI is not just a chip problem but an infrastructure integration problem. Solutions are coming (HBM3 memory, optical interconnects, advanced cooling, etc.), but each adds cost and complexity. This is the engineering reality underlying the sky-high investment figures: it’s not just more of the same, but new technology layers needed to keep performance growing.

Startup Struggles in the Nvidia Era

With AI compute demand surging, one might expect a golden age for chip startups. Indeed, over the past five years, dozens of ambitious startups sprang up to build novel AI accelerators. They often promised better performance or efficiency than Nvidia by tailoring designs specifically to AI. However, the first wave of these companies has encountered a harsh truth: Nvidia’s dominance isn’t just technical, it’s ecosystem and trust.

Breaking into this market has proven far harder than anticipated, and many startups are “hitting a snag” as major customers stick with the devil they know.

Consider Graphcore, one of the early unicorns in this space. The British startup’s Intelligence Processing Unit (IPU) garnered lots of hype and venture funding. But by 2023:

It reported only $2.7 million in revenue and a pre-tax loss of $204 million [9].

The company announced a 20% staff cut and admitted it needed new capital to survive.

Industry observers pointed squarely at Nvidia’s grip on the market, which had “chilled funding” for challengers.

Investors are reluctant to bet big on an unproven chip when Nvidia’s ecosystem (CUDA software, developer community, existing user base) creates a high barrier to entry. As Reuters succinctly put it, Nvidia’s dominance has made it more difficult for startups to access capital, as few want to take on Nvidia head-on. Graphcore isn’t alone, many AI chip startups have had to downsize ambitions or pivot to niche markets.

Another headwind: the very customers who might champion alternatives have mostly doubled-down on Nvidia, at least in the near term. Meta (Facebook), for example, has publicly discussed reducing its reliance on Nvidia by developing custom silicon, but those projects take time. In 2025 Meta was testing an in-house “MTIA” accelerator for AI training and inference, aiming for deployment by 2026 [10]. Yet Meta simultaneously remains “one of Nvidia’s biggest customers”, reportedly investing vast sums in Nvidia GPUs to power its AI labs and products. Meta even scrapped an earlier internal AI chip effort after poor results, reverting to commercial hardware. This pattern of big players exploring their own chips but relying on Nvidia in the interim means startups struggle to land marquee deals. If a Meta or Google is going to buy fewer Nvidia GPUs, it’s likely because they built their own (like Google’s TPUs or Amazon’s Trainium), not because they chose a third-party startup’s chip.

All is not lost for the challengers. Those focusing on specialized niches, like inference acceleration or low-power edge AI, have better odds than going directly at Nvidia’s training stronghold. For instance, Groq (a startup focused solely on AI inference) secured a $1.5 billion commitment from Saudi Arabia to supply chips for the kingdom’s AI initiatives [11]. Such deals can give startups lifelines to scale production. Furthermore, as the AI compute market grows, it may simply become too large for one company to satisfy every need, leaving room for multiple winners in different segments (much as Intel, AMD, and others coexisted in CPUs).

For now, though, the story of first-generation AI chip startups is mostly cautionary. High-profile players like Graphcore have struggled to justify valuations, and even those with technical wins find the market adoption slow. The “incumbency advantage” of Nvidia is enormous. One venture investor likened trying to beat Nvidia now to “trying to beat Microsoft in PC operating systems in the 1990s”. Although it is not impossible, it is a very steep climb. This has tempered some of the initial excitement and forced startups to refine pitches: instead of “we’ll beat Nvidia now,” it’s often “we have a specific angle (cost, efficiency, integration) that complements or eventually augments the dominant platforms.”

Escaping the CUDA Lock-In

While Nvidia’s hardware gets most of the spotlight, an equally important part of its dominance is software. The CUDA programming platform and its associated libraries have become the default for AI development, effectively locking many users into Nvidia GPUs. Recognizing this, the industry has been pushing for open, vendor-neutral alternatives to avoid being hostage to a single vendor’s ecosystem. One major effort is SYCL and oneAPI, spearheaded by the Khronos Group and Intel. These are open standards intended to let developers write code portable across GPUs, CPUs, and other accelerators, without proprietary languages.

Intel’s oneAPI (built around SYCL, which is like “C++ for accelerators”) explicitly aims to “free software from vendor lock-in.” Its key features include:

A unified programming model for multiple architectures – CPUs, GPUs, FPGAs, and ASICs.

Open-source availability and cross-vendor support (with contributions from ARM, Xilinx, and others).

Standardized abstractions for compute kernels and memory that can map to any backend, not just Nvidia’s CUDA.

As Intel’s VP of software Joe Curley explained, oneAPI’s value is that enterprises can choose the best hardware for a job “without getting locked into any one brand because of the programming model” [12]. The model defines abstractions for compute kernels and memory that can map to different devices, so code isn’t hardcoded to, say, CUDA or TensorRT. OneAPI is open-source, with contributions from multiple companies (Xilinx, ARM, etc. participated in its advisory board). Essentially, it’s trying to do for heterogeneous computing what SQL did for databases by providing a common language so that switching the backend doesn’t require rewriting your entire application.

There are promising signs here: oneAPI/SYCL compilers have matured, and even Nvidia has (begrudgingly) shown some support for standardized parallel C++ (though CUDA remains their focus). Some supercomputers (like those in Europe and Japan) have embraced SYCL to hedge against Nvidia-centric development. The goal is that if tomorrow a new chip offers 2x performance but isn’t from Nvidia, developers could adopt it without re-coding from scratch and reducing vendor lock-in as well as fostering competition. “Freedom to use whatever hardware accelerator they want” with minimal switching costs is the promise.

However, achieving this vision is challenging. CUDA’s network effects (years of optimizations, a huge developer base, third-party integrations) won’t be matched overnight. And ironically, companies like Intel face a dilemma: they want to promote oneAPI for the industry’s sake, but also hope it attracts users to Intel’s own GPUs and FPGAs.

Nonetheless, most experts agree that open standards are crucial for a healthy AI hardware ecosystem long term. Otherwise, innovation could stagnate around a single vendor’s priorities. We are likely to see increasing investment in such portability layers, including from governments wary of dependency on one company’s technology.

HPC vs AI: Divergent Needs, Converging Tools

It’s worth noting that “AI chips” aren’t the only game in town. Traditional high-performance computing (HPC) has its own demands, which sometimes conflict with AI trends. The core divergence lies in their fundamental hardware requirements:

HPC Needs Precision: For scientific codes, 64-bit (FP64) arithmetic is non-negotiable for numerical accuracy and stability.

AI Prioritizes Speed: Neural networks often thrive with lower precision (FP16, BF16, INT8), trading some numerical exactness for vastly higher throughput.

This means HPC practitioners prioritize a chip’s FP64 FLOPS, while AI engineers focus on FP16/FP8 performance and memory bandwidth [13].

For a long time, Nvidia catered to both in separate product lines (e.g., Tesla and Quadro GPUs with strong FP64 for HPC, versus inference accelerators with limited FP64). More recently, these worlds are converging somewhat. Nvidia’s latest data-center GPUs include mixed-precision Tensor Cores that accelerate FP64 as well, and HPC centers are exploring AI techniques.

Yet, the tension remains. As noted earlier, Nvidia’s newest Blackwell GPUs show a “mixed bag” on double precision: one can interpret that as Nvidia prioritizing AI workloads (with massive low-precision performance) at some cost to peak FP64. HPC users might view this as a step backward for their needs, even as AI users celebrate the speedups. AMD has seized on this by touting their MI300 series’ superior FP64 numbers, hoping to win HPC deployments that are less AI-centric.

The industry is also redefining benchmarks to reflect this convergence. New metrics such as HPL-AI, a mixed-precision variant of the LINPACK Top500 test, capture performance for hybrid AI-HPC workloads. Early results show:

Mixed-precision solvers can achieve 10–15× speedups over pure FP64 with minimal loss of accuracy [14][15].

Techniques like iterative refinement and the Ozaki method allow low-precision calculations to reach high-precision outcomes [16][17].

These innovations signal a future where AI and HPC borrow from each other’s playbooks. AI hardware gaining more precision, and HPC codes leveraging AI methods to accelerate simulations.

Nonetheless, for now HPC and AI remain partially distinct markets. Top supercomputers are still ranked by FP64 FLOPS; national labs still require chips that can run legacy codes with full precision. So, the ideal processor would deliver everything, but economic and physical realities force trade-offs. Companies must decide which metrics to optimize. In 2024–2025, Nvidia chose to maximize AI throughput (perhaps betting that any lost HPC share is a small price, or that HPC will adapt to mixed precision). AMD and Intel seem to be positioning with more balance (their GPUs and upcoming chips like Intel’s Falcon Shores are advertised as serving both HPC and AI well). The next few years will show whether “good enough FP64” is acceptable to HPC users in exchange for AI performance, or if a bifurcation persists.

New Challengers: Alternative AI Chips Proliferate

Despite the challenges for startups, the push for alternative AI hardware is far from over. In fact, the landscape of accelerators is more diverse than ever, with big tech companies and startups alike offering new chips that promise better performance or value for specific AI workloads. Here we highlight a few notable contenders making waves:

Intel’s Habana Gaudi2

Intel entered the AI accelerator fray by acquiring Habana Labs in 2019, and its Gaudi2 processor (7 nm, launched 2022) is aimed squarely at data-center training and inference. For a while, Gaudi flew under the radar, but recent independent tests have been eye-opening. In early 2024, researchers at Databricks benchmarked Gaudi2 against Nvidia’s A100 and H100 GPUs, and the results showed Gaudi2 held its own impressively.

For large language model inference, Gaudi2 matched the latency of Nvidia’s flagship H100 on decoding tasks and actually outperformed Nvidia’s older A100 [18]. In training, Gaudi2 achieved the second-fastest single-node LLM training throughput (around 260 TFLOPS per chip on BF16), only behind the H100 and it also beat the A100 by a good margin. Crucially, when cost is considered, Gaudi2 offered the best price-performance for both training and inference among the devices tested.

In other words, for each dollar spent on cloud instances, Gaudi2 delivered more AI work done than A100 or even H100 in those tests. Intel has touted these findings as validation that its solution is a “viable alternative” to Nvidia. With Gaudi3 coming in 2024 (expected to quadruple Gaudi2’s performance), Intel is clearly intent on carving a piece of the AI compute market. The challenge will be software i.e., getting developers to optimize for Intel’s architecture (which uses its own software stack, though PyTorch and TensorFlow support it via plugins). If they succeed, Intel’s AI chips could pressure Nvidia on price and appeal to cost-sensitive cloud providers or enterprises.

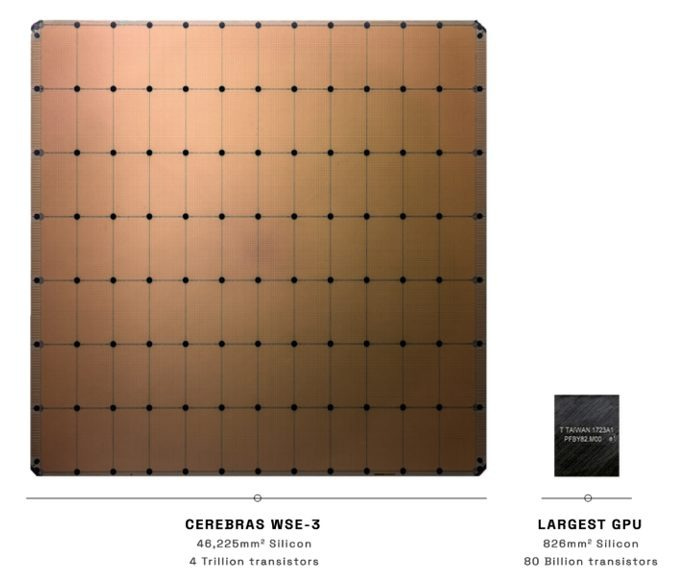

Cerebras Wafer-Scale Engine

Perhaps the most audacious approach is taken by Cerebras Systems, which designed the largest chip ever made, essentially an entire silicon wafer etched as one gargantuan “chip” with 850,000 cores and now over 2.6 trillion transistors (in its 2nd generation). In 2024, Cerebras announced a new AI inference system and claimed performance leaps that turned heads: reportedly 10× to 20× faster inference on certain large models compared to Nvidia’s top GPU (the H100) [3].

For instance, Cerebras said its technology could serve the 70-billion-parameter Llama model at up to 450 tokens/second, vs. around 30 tokens/sec on an H100 [19]. They achieve this by fitting entire models (even multi-billion parameter ones) into the WSE’s huge on-chip memory, eliminating the usual latency of sharding models across many smaller GPUs. It’s a bold strategy that yields blistering speed for batch inference, though at the cost of an exotic form factor and likely high expense upfront.

Cerebras has found some success, securing large deals like a partnership with G42 in the UAE and engaging labs that need massive models. The company filed for an IPO in late 2024, revealing some financials: about $136 million in first-half 2024 revenue but also a $66 million loss in that period. The IPO prospectus also noted Cerebras was highly dependent on a single customer at that time, reflecting the reality that wafer-scale systems are a niche, high-end product (so far). Cerebras’s future will hinge on whether ultra-large model training/inference becomes common enough to support a healthy market, and whether they can drive costs down over time. But as a technology feat, they’ve proven the concept of wafer-scale AI, forcing the industry to rethink what a “compute node” can be.

Groq’s Inference Engine

Among the startups, Groq has gained attention by focusing exclusively on AI inference (running trained models) rather than training. Groq’s founders created a novel chip architecture they dubbed the Groq LPU (Tensor Streaming Processor), optimized for predictable low-latency execution of AI models. Groq’s strategy is to provide high throughput at minimal latency and power for inference, making it attractive for real-time AI services.

The company offers its tech both as on-premise servers (GroqNodes) and via cloud APIs, supporting popular models from OpenAI, Meta, etc., on its chips [20]. Groq boldly claims its system can match or exceed GPU performance at significantly lower cost for many inference tasks. Its CEO Jonathan Ross argues that “inference is defining this era of AI, and we’re building the infrastructure to deliver it with high speed and low cost”.

Investors are buying in: in September 2025 Groq announced a $750 million funding round at a $6.9 billion valuation, more than doubling its value in a year. Notably, Groq has backing from major funds and even strategic support (the round included firms like BlackRock, as well as earlier investments from the likes of Samsung and Cisco). The company also struck a significant deal with Saudi Arabia to supply chips for AI initiatives there.

Groq’s challenge will be scaling its customer base (it reported powering some 2 million developers’ AI applications, up from 350k a year prior) and competing against not only Nvidia but also cloud-provider chips in the inference market. But with inference workloads exploding thanks to the deployment of generative AI in products, Groq is well positioned in a critical and growing segment. If it can truly deliver “GPU-level performance at lower cost,” that bodes well for AI practitioners seeking cost-efficient deployment options.

Amazon’s Trainium and Inferentia

Among the big cloud firms, Amazon Web Services (AWS) has been particularly aggressive in developing in-house AI chips to reduce reliance on Nvidia and offer cheaper AI compute to customers. AWS’s Inferentia chips (first launched in 2019, with Inferentia2 in 2022) target inference, while the Trainium chips (launched 2021, with Trainium2 on the horizon) target training and heavy-duty workloads. Amazon’s pitch is straightforward: comparable performance for much lower cost.

In early 2025, news emerged that AWS was directly pitching Trainium-based instances as 25% cheaper than Nvidia H100 instances for the same compute power. In one case, an AWS customer was told they could get “H100-level” training capability at three-quarters the price by using Trainium servers. For cost-conscious companies or those struggling to even obtain Nvidia GPUs (which have been in short supply), this is compelling. “AWS is mitigating the constraint of access to GPUs for AI” by offering its own chips, said one analyst, and keeping cloud AI interest high by lowering the barrier to entry.

All major cloud providers have similar aims, but AWS has been one of the most public about it. They’ve had success with prior custom silicon like the Graviton CPU, and now with Inferentia and Trainium they boast “cost-effective alternatives that are also performant for AI”, inserting themselves into the AI conversation for enterprise clients.

It’s important to note that Amazon’s chips don’t necessarily beat Nvidia on absolute performance. In fact Nvidia still “raises the bar” at the high end, and analysts agree “no one’s displacing them anytime soon” for the most demanding training workloads. But not every AI task needs the most powerful (and expensive) GPU. Many applications involve “simpler inferencing tasks” or smaller-scale training where a more modest chip can do the job at lower cost. AWS is “democratizing AI” by filling that space under Nvidia’s top tier.

For enterprises new to AI, AWS’s lower-cost instances mean they can experiment and prototype without huge capital or long wait times (some reported waiting 18 months to get Nvidia hardware due to shortages). The trade-off might be a bit of vendor lock-in (Trainium is only on AWS, and using it means adapting to AWS’s Neuron SDK), but many will find the cost savings worth it. In essence, Amazon is doing to Nvidia in AI what it did to Oracle in databases years ago, offering a cheaper cloud-based alternative that’s “good enough” for a wide swath of users, thereby undercutting the incumbent’s pricing power from below.

Outlook: An Expanding, Evolving Hardware Ecosystem

The picture that emerges is one of a rapidly expanding and evolving AI hardware ecosystem. Nvidia remains at the forefront, continuing to innovate (with technologies like AI-optimized HPC software, mixed-precision frameworks, and ever-more-powerful GPUs) and likely to dominate the high end for the foreseeable future [4]. But around that apex, a richer tapestry of compute options is taking shape:

Cloud providers are rolling out their own silicon and specialized instances, ensuring that AI compute isn’t scarce (or exorbitantly expensive) for the average user. This will broaden access to AI capabilities across industries as the “entry fee” comes down.

Startups and new players are targeting niches and some are achieving breakthroughs that either outperform legacy approaches or drastically cut costs. Not all will survive, but those that do (like Groq, potentially) will force incumbents to stay sharp and possibly collaborate (e.g., via acquisitions or partnerships).

Open standards and software are gradually eroding proprietary lock-in. Initiatives like oneAPI, as well as open-source frameworks that run on multiple backends (PyTorch XLA for TPUs, ONNX, etc.), mean the moat around Nvidia is not impregnable. The easier it becomes to port AI workloads across hardware, the more competition we’ll see on merits like price and efficiency.

Convergence of AI and HPC: AI techniques are being adopted in scientific computing, and conversely HPC’s demands (like reliability, precision) are influencing AI infrastructure. The next generation of supercomputers (exascale systems) often feature a mix of CPUs, GPUs, and AI accelerators all working together. This cross-pollination will likely yield hybrid chips and systems that are more capable across diverse workloads.

Ultimately, meeting the colossal compute needs of advanced AI will require every trick in the book including new chip architectures, new algorithms (to do more with less compute), huge investments in infrastructure, and smarter software to orchestrate it all. The “silent shift” to parallel processing has already reshaped data centers, but an even larger shift may be ahead: a move toward distributed AI infrastructure that spans specialized hardware, geographically dispersed clusters, and cloud-edge hybrids. In this scenario, no single hardware will reign supreme; instead, interconnects and software will knit together GPUs, TPUs, custom ASICs, and more into a cohesive platform.

One thing is certain: the AI compute revolution is just getting started. The past year showed both the power of having the right hardware (as GPT-4’s success rode on thousands of Nvidia A100s) and the pitfalls of constraints (as many found themselves unable to get GPUs for new projects). The coming years will be defined by how we as a global tech community scale our compute capabilities sustainably. It’s an exciting race, one that spans from nanoscale transistor innovations to gigawatt-scale power planning. And while the winners are yet to be crowned, the progress to date gives confidence that we’ll find ways to keep pushing AI forward, one parallel step at a time.

References

From Chips to Systems How AI is Revolutionizing Compute and Infrastructure | William Blair https://www.williamblair.com/Insights/From-Chips-to-Systems-How-AI-is-Revolutionizing-Compute-and-Infrastructure

$2 trillion in new revenue needed to fund AI’s scaling trend - Bain & Company’s 6th annual Global Technology Report | Bain & Company https://www.bain.com/about/media-center/press-releases/20252/$2-trillion-in-new-revenue-needed-to-fund-ais-scaling-trend---bain--companys-6th-annual-global-technology-report/

Red-Hot AI IPO Cerberas Claims Chips are 20X Faster Than NVIDIA (NVDA) - 24/7 Wall St. https://247wallst.com/ai-portfolio/2024/12/11/red-hot-ai-ipo-cerberas-claims-chips-are-20x-faster-than-nvidia-nvda/

Amazon undercuts Nvidia pricing by 25%, leveling market for simpler inferencing tasks | Network World https://www.networkworld.com/article/3848460/amazon-undercuts-nvidia-pricing-by-25-leveling-market-for-simpler-inferencing-tasks.html

The cost of compute power: A $7 trillion race | McKinsey https://www.mckinsey.com/industries/technology-media-and-telecommunications/our-insights/the-cost-of-compute-a-7-trillion-dollar-race-to-scale-data-centers

AWS cuts costs for H100, H200, and A100 instances by up to 45% - DCD https://www.datacenterdynamics.com/en/news/aws-cuts-costs-for-h100-h200-and-a100-instances-by-up-to-45/

Nvidia looks to shoehorn AI into more HPC workloads • The Register https://www.theregister.com/2024/11/18/nvidia_ai_hpc/

LLM Inference: Core Bottlenecks Imposed By Memory, Compute Capacity, Synchronization Overheads (NVIDIA) https://semiengineering.com/llm-inference-core-bottlenecks-imposed-by-memory-compute-capacity-synchronization-overheads-nvidia/

Losses widen, cash needed at chip startup Graphcore, an Nvidia rival, filing shows | Reuters https://www.reuters.com/technology/losses-widen-cash-needed-chip-startup-graphcore-an-nvidia-rival-filing-2023-10-05/

Meta has developed an AI chip to cut reliance on Nvidia, Reuters reports | Digital Watch Observatory https://dig.watch/updates/meta-has-developed-an-ai-chip-to-cut-reliance-on-nvidia-reuters-reports

Groq more than doubles valuation to $6.9 billion as investors bet on AI chips | Reuters https://www.reuters.com/business/groq-more-than-doubles-valuation-69-billion-investors-bet-ai-chips-2025-09-17/

Intel oneAPI zaps data center hardware vendor lock-in - SDxCentral https://www.sdxcentral.com/analysis/intel-oneapi-zaps-data-center-hardware-vendor-lock-in/

The Great 8-bit Debate of Artificial Intelligence - HPCwire https://www.hpcwire.com/2023/08/07/the-great-8-bit-debate-of-artificial-intelligence/

Do currently available GPUs support double precision floating point … https://scicomp.stackexchange.com/questions/6853/do-currently-available-gpus-support-double-precision-floating-point-arithmetic

Why comparing AI clusters to supercomputers is bananas - R&D World https://www.rdworldonline.com/hold-your-exaflops-why-comparing-ai-clusters-to-supercomputers-is-bananas/

Benchmarking Summit Supercomputer’s Mixed-Precision Performance https://www.hpcwire.com/off-the-wire/benchmarking-summit-supercomputers-mixed-precision-performance/

In This Club, You Must ‘Earn the Exa’ - HPCwire https://www.hpcwire.com/2024/10/17/in-this-club-you-must-earn-the-exa/

Exclusive: Databricks research confirms that Intel’s Gaudi bests Nvidia on price performance for AI accelerators | VentureBeat https://venturebeat.com/ai/exclusive-databricks-research-confirms-that-intel-gaudi-bests-nvidia-on-price-performance-for-ai-accelerators

Cerebras Launches the World’s Fastest AI Inference https://www.cerebras.ai/press-release/cerebras-launches-the-worlds-fastest-ai-inference

Nvidia AI chip challenger Groq raises even more than expected, hits $6.9B valuation | TechCrunch https://techcrunch.com/2025/09/17/nvidia-ai-chip-challenger-groq-raises-even-more-than-expected-hits-6-9b-valuation/