AI Productivity: What 133% Gains Really Mean

Exploring how companies are experiencing productivity gains from AI and uncovering the hidden trade-offs behind these numbers.

Introduction

Research on small and medium-sized enterprises (SMEs) suggests that adopting AI could boost productivity by 27% to 133% [1]. At the top end, that's more than double the output without doubling the workforce, the hours, or even the budget. These are the kind of numbers that make AI feel more like a necessity than an option. But as you'll soon see, boosts like this often come with trade-offs that aren't obvious at first glance.

Now you might be wondering: how exactly does AI even connect to productivity gains for these startups? What AI are we talking about here?

It’s all about Generative AI, or GenAI for short. It’s a subfield of Artificial Intelligence that uses generative models to write articles, generate music, design images, and… even write code.

So when you see tools like ChatGPT, GitHub Copilot or Claude generating large parts of a codebase from a few lines of English, sweeping through your repository to find and fix bugs, or generating test cases you didn’t even think of, that’s Generative AI in action.

You've likely heard the narratives, the bold predictions or seen the dazzling case studies, but here’s what we do know:

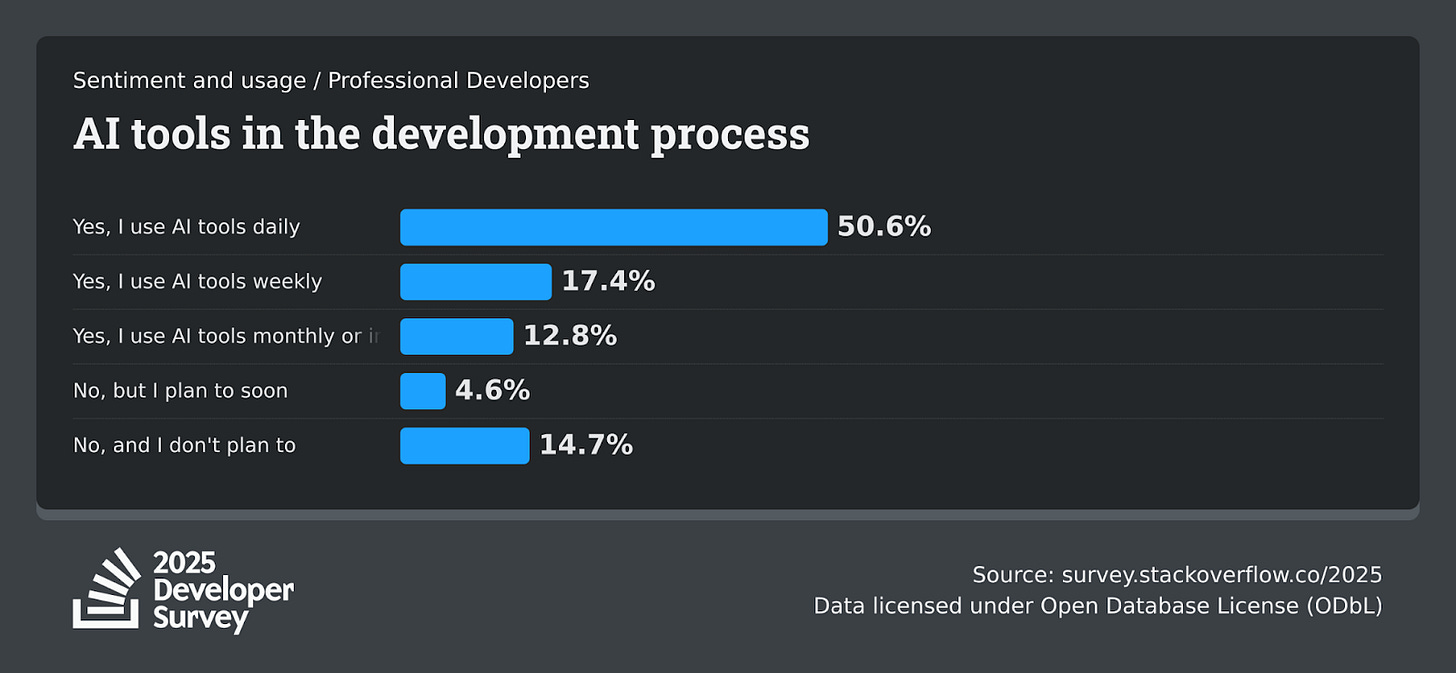

According to the Stack Overflow 2025 Developer Survey, 84% of developers are using or planning to use AI tools in their development process and workflows, up from 76% the previous year [2].

97% of developers now use at least one AI assistant such as ChatGPT, Claude or Gemini, and 29% of all developer code is generated by AI [3].

On the productivity front, a controlled trial with full-time Google engineers found that using AI shortened task time by about 21% [4].

Image source: Stack Overflow 2025 Developer Survey (survey.stackoverflow.co/2025)

Quite frankly, these numbers don’t surprise you or me. In fact, I think it’s safe to say they’re expected, given how quickly AI tools have made their way into the hands of almost everyone in tech. What’s less common, though, are the deeper questions these numbers raise.

Are there hidden costs to the speed AI brings? Are we even aware of them? About five years ago, before the AI boom, teams had some baseline level of productivity. Has AI actually raised it, or has it stayed a flat line?

Let’s answer these questions and find out whether the immediate gains from GenAI outweigh its long-term strategic implications and potential risks.

Benefits & Consequences

Faster Execution… but at What Quality Cost?

Speed is the compelling headline everyone sees first. Tasks that used to take hours now take minutes, and drafts appear in seconds. Research that once took weeks or months to compile can now be summarized at your fingertips. This makes task execution faster and shrinks project timelines, but at what cost?

Let’s break down what “faster” looks like in real startup work:

In engineering, use cases and test cases can be generated quickly, and you can move from a blank page to compiling code in very little time.

In product and design, UI/UX drafts can be quickly generated. Even turning those drafts into components and generating variants saves a lot of time.

Marketing and sales get a boost as well: briefs become posts, landing pages, and even call scripts.

Support and operations aren’t left out. AI agents triage tickets, create knowledge base answers and propose fixes.

The productivity gains are obvious. More shots on goal, more experiments, more surface area covered by a small team. But let’s look at where quality quietly pays the bill. Quality here doesn’t just mean “no typos” in docs or generated text, or no syntax errors in code; AI is actually pretty good at avoiding those. Quality here means:

Correctness: AI tends to hallucinate when it has to make decisions on its own, especially when you haven’t given it enough context. It can also generate code that runs without errors but misses edge cases or concurrency concerns.

Clarity: We often see verbose, pattern-matched code that hides intent, or comments that explain what the code does but not why a decision was made.

Maintainability: AI doesn’t care much whether the way it structures your code will last six months, let alone six years. It prioritises answering your question with a solution that works. But when your product pivots, a new engineer is onboarded, or you need to optimize for scale, the trade-offs become clear.
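To make the correctness point concrete, here is a hypothetical example of code that runs without errors, passes a quick happy-path check, and still silently drops data on an edge case. The function names `chunk_buggy` and `chunk` are invented for illustration and are not drawn from any real codebase or tool output.

```python
def chunk_buggy(items: list, size: int) -> list[list]:
    """Plausible-looking version: split items into lists of `size`."""
    # Bug: the range stops before any final partial chunk, so trailing
    # items are silently dropped. No exception, no warning.
    return [items[i:i + size] for i in range(0, len(items) - size + 1, size)]


def chunk(items: list, size: int) -> list[list]:
    """Corrected version: keeps the final partial chunk and rejects bad sizes."""
    if size <= 0:
        raise ValueError("size must be a positive integer")
    return [items[i:i + size] for i in range(0, len(items), size)]


if __name__ == "__main__":
    print(chunk_buggy([1, 2, 3, 4], 2))     # [[1, 2], [3, 4]]  happy path looks fine
    print(chunk_buggy([1, 2, 3, 4, 5], 2))  # [[1, 2], [3, 4]]  the 5 is lost
    print(chunk([1, 2, 3, 4, 5], 2))        # [[1, 2], [3, 4], [5]]
```

A reviewer skimming the first version sees a clean one-liner; only an edge-case input (a length that is not a multiple of `size`) exposes the problem.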

Faster execution feels exhilarating in the moment. Demos are great, MVPs are ready on schedule or even early, and the board sees milestones met on time. But speed amplifies small mistakes. More throughput means more opportunities for small errors to slip in. Team members working in parallel on concurrent deliverables are more likely to introduce inconsistent patterns. Speed also creates pressure to skip checks because the output “already looks good”. And team leads may mistake velocity metrics for progress and stop asking the hard questions that matter.

The operating playbook, therefore: keep the speed, protect the quality!

This involves asking the right questions and being aware of when speed is safe and when it is risky.

Always define quality before you start. If quality is not defined, it becomes difficult to guard it.

Use the Plan-and-Solve Prompting strategy when using AI to produce content. Ask the model to devise a plan that breaks the task into subtasks, then carry out each subtask according to that plan. It has been reported to outperform zero-shot Chain-of-Thought prompting across a range of reasoning benchmarks [5].

Allocate quality budgets. A dedicated Quality Assurance (QA) engineer addresses this directly, but many startups can’t afford one yet. In that case, ship small, then spend a fixed window after each release on stability and simplicity.

Put AI on double-check duty, not only to create stuff. Ask the model to propose edge cases and failure modes for the thing it just wrote. Have it generate negative tests, not just happy paths.
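The two prompting items in this playbook can be captured as reusable templates. The sketch below assumes nothing about which model or API you call. The helper names `plan_and_solve_prompt` and `double_check_prompt`, and all of the wording, are hypothetical illustrations; the planning instruction paraphrases the Plan-and-Solve idea described above rather than quoting any paper verbatim.

```python
# Hypothetical prompt templates; the wording is illustrative, not from any tool.
PLAN_AND_SOLVE_TRIGGER = (
    "Let's first understand the problem and devise a plan to solve it, "
    "breaking it into subtasks. Then, let's carry out the plan and solve "
    "each subtask step by step."
)


def plan_and_solve_prompt(task: str) -> str:
    """Wrap a task so the model plans before it produces anything."""
    return f"Task:\n{task.strip()}\n\n{PLAN_AND_SOLVE_TRIGGER}"


def double_check_prompt(artifact: str, kind: str = "code") -> str:
    """Ask the model to critique its own output instead of creating more."""
    return (
        f"Here is some {kind} you produced earlier:\n\n{artifact.strip()}\n\n"
        "Do not rewrite it. Instead:\n"
        "1. List edge cases and failure modes it does not handle.\n"
        "2. Propose negative tests (invalid input, empty input, limits), "
        "not just happy-path tests.\n"
        "3. Flag any assumption you made that the task did not state."
    )


if __name__ == "__main__":
    print(plan_and_solve_prompt("Add CSV export to the reports page."))
```

Send the first prompt when generating, then feed the model's output into the second before anyone treats the work as done. Keeping both as plain functions makes the habit easy to standardize across a team.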

That is how you keep the speed and avoid the slow bleed that comes from silent quality loss.
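The "negative tests, not just happy paths" advice is easiest to see in a test file. Below is a minimal sketch using Python's standard `unittest` module; `parse_percentage` is a hypothetical helper standing in for something an assistant might have just written. The happy-path class is what a quick generation usually produces; the negative class is what you should ask the model to add.

```python
import unittest


def parse_percentage(text: str) -> float:
    """Parse strings like '15%' or ' 7.5 % ' into a float between 0 and 100."""
    cleaned = text.strip()
    if not cleaned.endswith("%"):
        raise ValueError(f"missing '%': {text!r}")
    value = float(cleaned[:-1].strip())  # float() raises ValueError on junk
    if not 0 <= value <= 100:            # also rejects nan, since nan compares False
        raise ValueError(f"out of range: {text!r}")
    return value


class HappyPathTests(unittest.TestCase):
    def test_plain_value(self):
        self.assertEqual(parse_percentage("15%"), 15.0)

    def test_whitespace_and_decimal(self):
        self.assertEqual(parse_percentage(" 7.5 % "), 7.5)


class NegativeTests(unittest.TestCase):
    def test_rejects_bad_input(self):
        # Empty, sign-only, missing '%', non-numeric, below/above range, NaN.
        for bad in ["", "%", "15", "abc%", "-5%", "101%", "nan%"]:
            with self.subTest(bad=bad):
                with self.assertRaises(ValueError):
                    parse_percentage(bad)
```

Saved as, for example, `test_percentage.py`, it runs with `python -m unittest`; the `subTest` loop reports each bad input separately if one of them unexpectedly parses.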

The AI Generalist Trap: Doing Everything, Mastering Nothing

One of the most celebrated promises of AI in startups is broader access to high-level skills. Today, we see marketers generating code for landing pages and developers drafting legal disclaimers or compelling blog posts. A founder can design a logo and create a sales deck without ever leaving ChatGPT.

AI acts as a force multiplier, blurring the traditional boundaries between roles and allowing small teams to operate at a level of expertise that was previously unaffordable. The advantage is tremendous: startups can move at an amazing pace, and skill gaps are no longer a barrier.

No More Bottlenecks: A product manager doesn't need to wait for a developer to tweak website copy; they can generate a great draft themselves. A developer can quickly script a data analysis without being a data scientist.

Idea Validation at Speed: Concepts can be prototyped and tested across domains. One or two people can design, write copy and code in hours, not weeks. This allows for more experiments, more validated learning, and a better chance of finding product-market fit.

Talent Versatility: In a small team, this fluidity is a superpower. It allows team members to stretch beyond their core talents and contribute more holistically to the company's goals.

However, this newfound power has a dark side: it can create an illusion of mastery while quietly eroding the value that deep, specialized expertise brings. A few of the risks:

Outputs generated by AI are often competent but rarely exceptional. If your startup relies entirely on AI-generated output, you risk becoming a company that does everything at a “good enough” level, but not well enough to truly stand out. Your marketing copy is generic, your code is bloated, your designs lack a unique glow [6].

Slowly, developers who rely on AI stop exercising the fundamental muscles of their craft, and complex problem-solving skills fade from lack of use.

If an AI-generated legal clause fails or a piece of marketing copy is misleading, who is accountable? The employee who prompted the AI but lacks the expertise to vet the output? The startup often bears the risk for outputs it lacks the expertise to judge.

The goal isn’t to replace talent with AI, but to use AI to make talent more capable. The mindset shifts from trying to play every instrument yourself to expertly guiding and synthesizing the output of others, including AI. Startups can do this by:

Establishing a "Human-in-the-Loop" for Critical Work: Identify your critical operations, from core product code and financial models to public-facing brand messaging. These should always be drafted or rigorously vetted by humans. Use AI for drafts, ideas, and rough cuts, but not for final execution in these areas.

The value of AI is in collaboration. The marketer should use AI to generate 10 versions of ad copy, then use their expertise to synthesize the best elements into a final, on-brand masterpiece. The developer should use AI to generate boilerplate, then refactor it to fit the specifics of their codebase rather than delegating the entire job to AI.

In short, use AI to break down barriers and unblock progress, but never let it convince you that broad competence can replace deep mastery. Your startup's ultimate competitive advantage will always be unique, human insight. AI is the tool that helps you apply it more broadly.

The Overall Organizational Impact

We’ve highlighted some pros and cons of AI gains for individuals and teams in a startup; now let’s look at how AI affects overall organizational goals. What happens when you amplify individual output without equally amplifying the organization's ability to absorb it? You create a scaling paradox: the productivity gains the tool delivers are cancelled out by an overwhelming volume of reviews and quality assurance work.

Many startups don’t have dedicated QA engineers yet, so developers carry this load. If AI helps developers write three times as much code, they also have three times as much code to review.

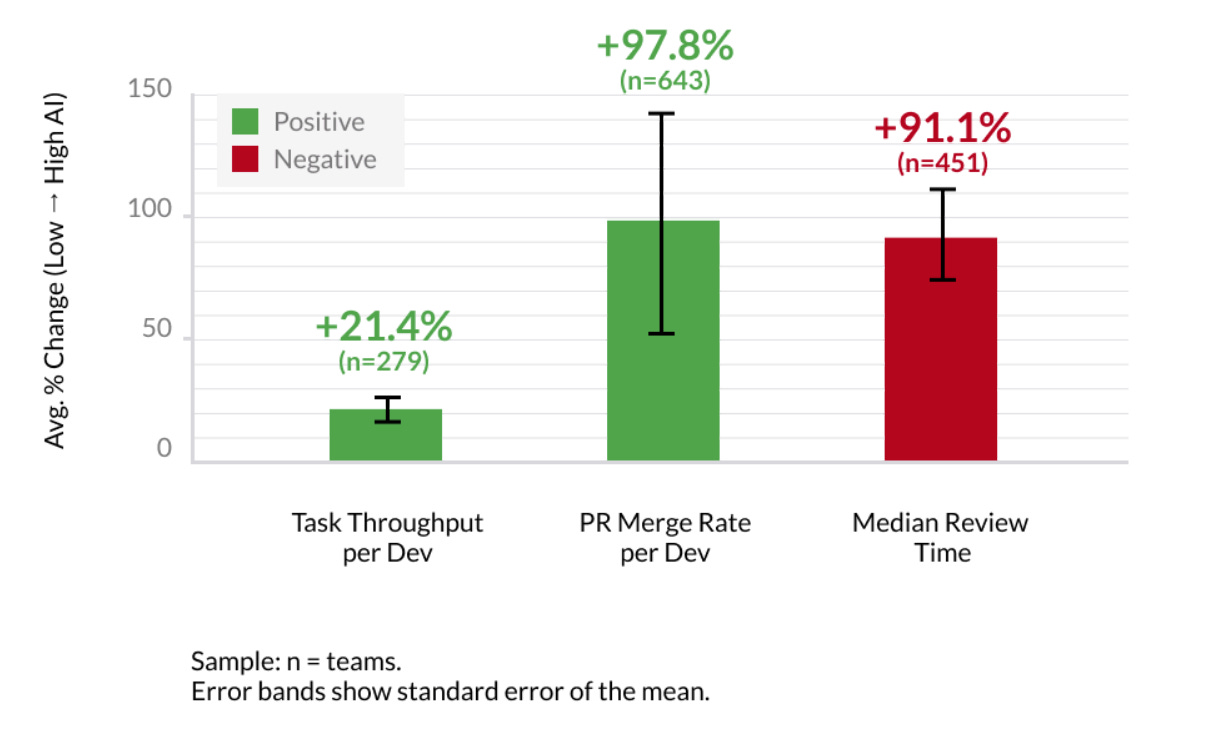

Image source: Faros AI – “The AI Productivity Paradox Report 2025” (July 2025).

The marketing team that can now generate ten campaign variants still needs human judgment to pick the right one. Teams find themselves either rushing reviews (introducing more bugs) or becoming review bottlenecks that slow everything down.
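The review squeeze is simple arithmetic. The sketch below is a deliberately crude weekly queue model with invented numbers (10 change requests a week before AI, 30 after, and a fixed capacity of 12 reviews a week); `review_backlog` is a hypothetical helper, not a calculation taken from the Faros AI report.

```python
def review_backlog(weeks: int, new_per_week: int, reviews_per_week: int,
                   start: int = 0) -> list[int]:
    """Return the unreviewed backlog at the end of each week.

    Crude model: work arrives at a constant rate and the team can review
    at most `reviews_per_week` items; anything beyond that carries over.
    """
    backlog = start
    history = []
    for _ in range(weeks):
        backlog += new_per_week
        backlog -= min(backlog, reviews_per_week)
        history.append(backlog)
    return history


if __name__ == "__main__":
    print(review_backlog(4, new_per_week=10, reviews_per_week=12))  # [0, 0, 0, 0]
    print(review_backlog(4, new_per_week=30, reviews_per_week=12))  # [18, 36, 54, 72]
```

While output stays below review capacity, the queue stays empty. Once output exceeds it, the backlog grows by the difference every single week, which is the point where teams start rushing reviews or become the bottleneck.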

There’s also an uneven adoption problem [7]. Your frontend developers might be generating views and pages at lightning speed, while your senior backend developer prefers the traditional approach. Since software products depend on many teams working together, the faster teams can’t fully realize their gains, and AI ends up magnifying the gaps between teams.

All of this creates a frustrating scenario for leadership. AI's productivity gains only pay off when the entire workflow can scale to handle the new volume and complexity, not when teams generate far more output than the review process can handle.

The solution isn't to slow down developers; it's to scale the supporting infrastructure with the same AI-powered efficiency.

AI-Augmented Review, Not Just AI-Augmented Creation: Mandate the use of AI in the review process. Use tools that automatically scan change requests for common issues, security anti-patterns, and style inconsistencies before a human ever looks at them. This elevates the human reviewer's role to evaluating architecture and intent, not just spotting syntax errors [8].

Automate the Testing Burden: Fight AI-generated complexity with AI-generated tests. Invest in tools that can auto-generate unit and integration tests, or at a minimum, surface areas of the codebase with poor test coverage. This helps manage the testing load that comes with increased output.

Redefine "Done": In an AI-powered workflow, "code written" is no longer the bottleneck. "Code reviewed, tested, and deployed" is. Stop measuring individual developer productivity by output volume. Instead, measure team productivity by throughput, such as how many features successfully and stably reach users. This aligns incentives with sustainable progress, not just code generation.

Embrace Tiered Reviews: For smaller, well-scoped changes, especially those generated by AI, empower a broader range of personnel to approve them. Don't let every change request wait for the same lead. This distributes the review load and accelerates the flow of low-risk work.
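The AI-augmented review and tiered review ideas fit together naturally: an automated pre-check runs first, and its findings feed a routing rule. The sketch below is illustrative only. The regex rules, the `SENSITIVE_PATHS` prefixes, the 50-line threshold and the tier names are all hypothetical; in practice the pre-check would be a real linter or security scanner rather than three regular expressions.

```python
import re
from dataclasses import dataclass

# Hypothetical pre-review rules; stand-ins for a real linter or security scanner.
PRECHECK_RULES = {
    "possible hard-coded secret": re.compile(
        r"(api[_-]?key|secret|password)\s*=\s*['\"]", re.IGNORECASE
    ),
    "use of eval()": re.compile(r"\beval\("),
    "leftover debug print": re.compile(r"^\s*print\("),
}

# Hypothetical high-risk areas that always go to a senior reviewer.
SENSITIVE_PATHS = ("auth/", "billing/", "migrations/")

SMALL_CHANGE_MAX_LINES = 50  # hypothetical threshold for "well-scoped"


@dataclass
class ChangeRequest:
    files: list[str]
    added_lines: list[str]


def precheck(change: ChangeRequest) -> list[str]:
    """Run every rule over every added line and collect readable findings."""
    findings = []
    for line in change.added_lines:
        for label, pattern in PRECHECK_RULES.items():
            if pattern.search(line):
                findings.append(f"{label}: {line.strip()}")
    return findings


def route(change: ChangeRequest) -> tuple[str, list[str]]:
    """Pick a review tier and return it together with the automated findings."""
    findings = precheck(change)
    touches_sensitive = any(f.startswith(SENSITIVE_PATHS) for f in change.files)
    if findings or touches_sensitive:
        return "senior", findings   # risk detected: the lead reviews it
    if len(change.added_lines) <= SMALL_CHANGE_MAX_LINES and len(change.files) <= 3:
        return "peer", findings     # small and scoped: any teammate can approve
    return "standard", findings     # everything else: normal review queue


if __name__ == "__main__":
    small = ChangeRequest(files=["ui/button.py"], added_lines=["label = 'Save'"])
    leaky = ChangeRequest(files=["ui/form.py"], added_lines=['API_KEY = "abc123"'])
    print(route(small))  # ('peer', [])
    print(route(leaky))  # ('senior', ['possible hard-coded secret: API_KEY = "abc123"'])
```

The design choice worth copying is the order: machines filter first, so the senior reviewer's limited time goes only to changes that are large, sensitive, or already flagged.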

The lesson is clear: AI's benefit is only realized if the entire project lifecycle matures and progresses alongside it.
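The "Redefine Done" point is easier to argue with numbers in hand. The sketch below contrasts output volume with stable throughput; the `Feature` record, both metric functions and the two sprints of data are hypothetical, invented only to show how the two metrics can diverge.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    lines_added: int
    shipped: bool      # reviewed, tested and deployed to users
    rolled_back: bool  # reverted or hot-fixed after release


def output_volume(features: list[Feature]) -> int:
    """Raw code produced: the individual-output metric to stop relying on."""
    return sum(f.lines_added for f in features)


def stable_throughput(features: list[Feature]) -> int:
    """Team metric: features that reached users and stayed there."""
    return sum(1 for f in features if f.shipped and not f.rolled_back)


# Hypothetical sprints, invented purely for illustration.
before_ai = [Feature(500, True, False) for _ in range(4)]
after_ai = (
    [Feature(600, True, False) for _ in range(4)]     # shipped and stable
    + [Feature(600, True, True) for _ in range(3)]    # shipped, then rolled back
    + [Feature(600, False, False) for _ in range(3)]  # still waiting on review
)

if __name__ == "__main__":
    print(output_volume(before_ai), output_volume(after_ai))          # 2000 6000
    print(stable_throughput(before_ai), stable_throughput(after_ai))  # 4 4
```

Lines of code triple, yet the number of features that reached users and stayed there does not move, which is exactly the gap that velocity metrics can hide.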

Conclusion

So yes, the numbers don't lie: productivity gains of 27% to 133% speak volumes, making AI feel less like an option and more like a necessity for survival and competition, especially for startups. But evidence and experience reveal a more nuanced reality, one with deeper organizational challenges.

AI amplifies everything, both your strengths and your weaknesses. It accelerates execution while potentially compromising quality. Most importantly, it floods your organization's workflows with output they were not designed to handle, creating bottlenecks that erode the very gains AI promised.

For a small team, the path to maximizing AI's benefits lies not in adopting it the fastest, but in thoughtfully redesigning your entire operational framework around it. This means shifting focus from individual productivity to system-wide throughput, and from AI-augmented creation to AI-augmented everything, including review, testing, and quality assurance.

The productivity gains are real, but they are only sustainable if startups understand the trade-offs and integrate AI wisely based on their organizational needs.

References

[1] University of St Andrews. (2024). Adopting AI could boost the productivity of small and medium businesses by up to 133% [Press release]. Retrieved from https://news.st-andrews.ac.uk/archive/adopting-ai-could-boost-the-productivity-of-small-and-medium-businesses-by-up-to-133/

[2] Stack Overflow. (2025). 2025 Stack Overflow Developer Survey. Stack Overflow. Retrieved from https://insights.stackoverflow.com/survey/2025

[3] HackerRank. (2025). 2025 HackerRank Developer Skills Report. HackerRank. Retrieved from https://www.hackerrank.com/research/developer-skills/2025

[4] Liang, J., et al. (2024). The Productivity Effects of AI in Software Engineering: Evidence from a Controlled Trial at Google. arXiv:2410.12944v3 [cs.SE]. https://doi.org/10.48550/arXiv.2410.12944

[5] Wang, Z., et al. (2021). Plan-then-Generate: Controlled Data-to-Text Generation via Planning. arXiv:2108.13740 [cs.CL]. https://arxiv.org/pdf/2108.13740

[6] Dell'Acqua, F., et al. (2023). Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality. Harvard Business School Technology & Operations Mgt. Unit Working Paper No. 24-013. https://www.hbs.edu/ris/Publication%20Files/24-013_b4c5e4e3-0d9c-49f2-b085-d6c34e0f5f6c.pdf

[7] Faros.ai. (2025). The AI Productivity Paradox: AI Coding Assistants Increase Developer Output, But Not Company Productivity (p. 9). Faros AI. http://faros.ai/ai-productivity-paradox

[8] Athena Institute for Tech Economics. (2025). AI Productivity Paradox Report 2025.